The organizations

The Bureau of Ocean Energy Management and the National Marine Fisheries Service (NOAA Fisheries) are responsible for managing and conserving the United States' marine ecosystems. This includes monitoring Cook Inlet belugas, an endangered population that now numbers fewer than 300 whales.

The challenge

To track the health of the Cook Inlet belugas, NOAA Fisheries conducts annual aerial photographic surveys in the spring and summer months, when the whales gather around river mouths near Anchorage, Alaska. Reviewers then examine the photographs to identify individual whales based on their coloration, marks, scarring, and other physical features. This process, called photo-identification, is a noninvasive technique for identifying and tracking individuals in a wild animal population over time.

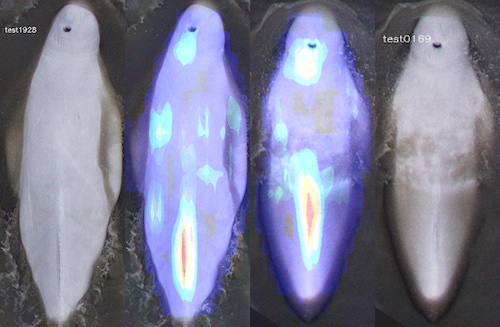

Manual photo-identification is slow and labor-intensive. Given a new photo of a whale, reviewers must carefully compare subtle physical features against those in a large database of past photos to determine which individual the new photo shows. A tool that makes this process more efficient must not only solve this difficult computer vision problem well enough to surface likely matches accurately, but also be interpretable, so that reviewers can quickly decide which candidate photo is the correct match.
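A common way to frame this matching problem in software is as retrieval: represent each photo as a numeric feature vector (an "embedding") and rank database photos by how similar their vectors are to the query's. The sketch below is illustrative only. It assumes embeddings have already been produced by some model, and the function names, photo IDs, and vectors are hypothetical; the source does not describe how the challenge solutions or MIEW-ID represent images.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def rank_candidates(
    query: Sequence[float],
    database: Dict[str, Sequence[float]],
    top_k: int = 5,
) -> List[Tuple[str, float]]:
    """Hypothetical helper: return the top_k most similar database photos, best first."""
    scored = [(photo_id, cosine_similarity(query, emb)) for photo_id, emb in database.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Usage with made-up 3-dimensional embeddings (real models use far larger vectors).
database = {
    "whale_A_2019": [0.9, 0.1, 0.0],
    "whale_B_2020": [0.0, 1.0, 0.2],
    "whale_C_2021": [0.7, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]
for photo_id, score in rank_candidates(query, database, top_k=2):
    print(f"{photo_id}: {score:.3f}")  # whale_A_2019 ranks first, then whale_C_2021
```

A ranked shortlist like this is what lets a reviewer inspect a handful of likely candidates instead of the whole database; the quality of the ranking depends entirely on how well the embedding model captures identifying features.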

The approach

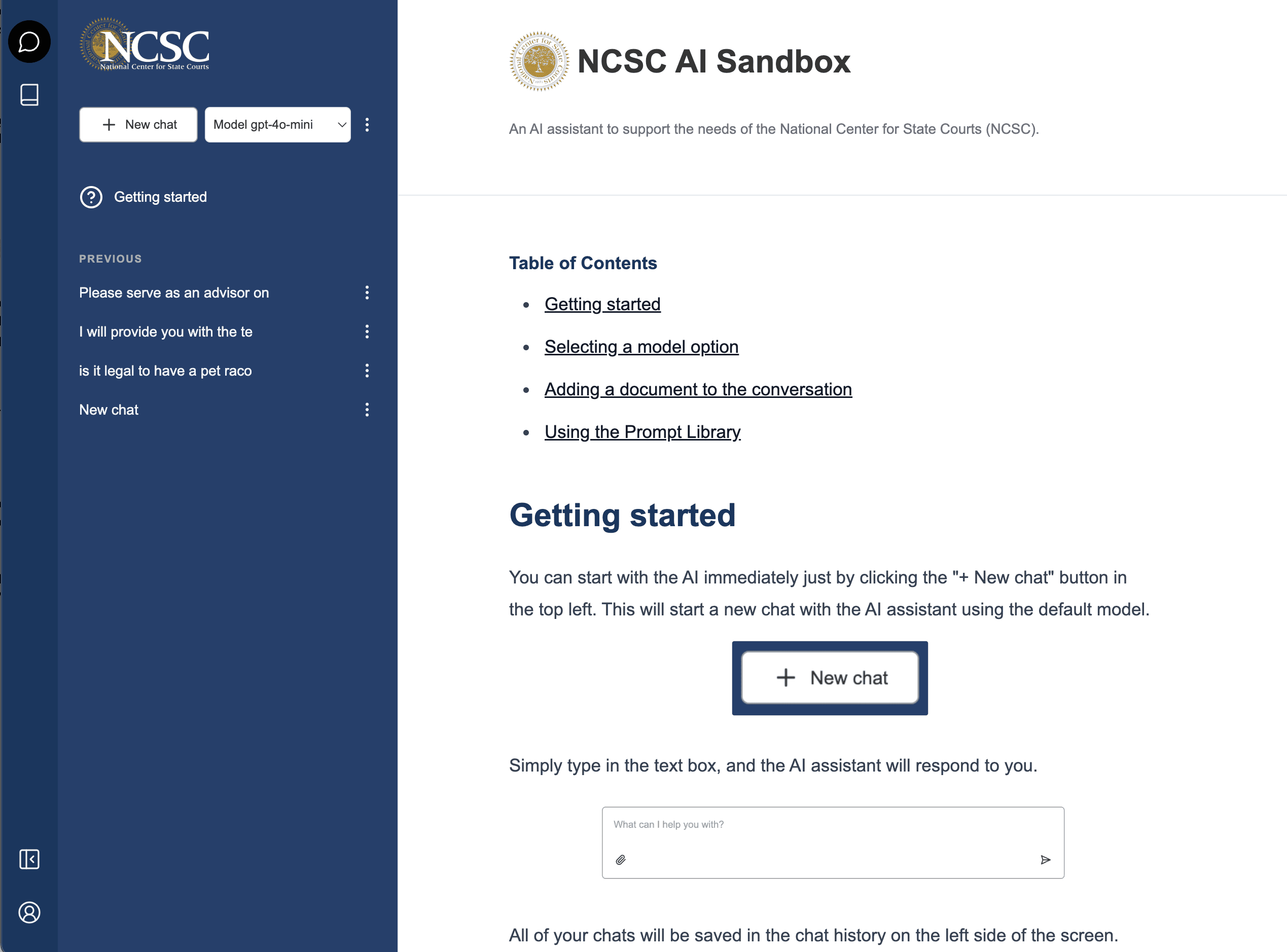

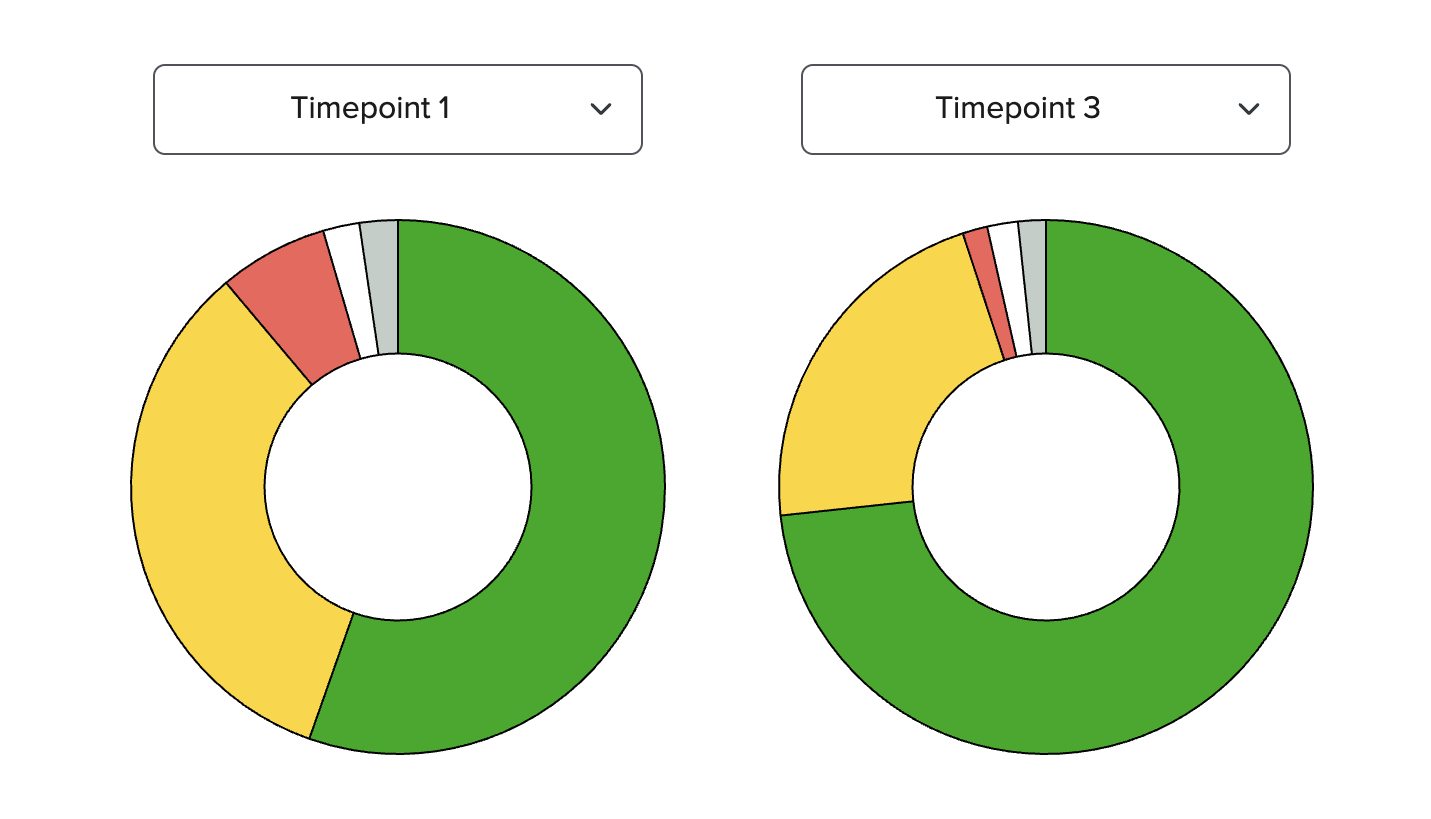

DrivenData hosted the Where's Whale-do? challenge to rapidly test different computer vision approaches to this problem. Our data scientists worked closely with wildlife computer vision experts from Wild Me, the organization behind Flukebook, the data platform that NOAA Fisheries uses, to design an evaluation procedure covering ten different scenarios, each with its own configuration of query and database images, to ensure that solutions would be robust and generalizable. The challenge also featured an explainability bonus round in which top performers were invited to submit explainability solutions for their models.

The results

The challenge received over 1,000 submissions over the course of two months. The winning solutions combined high-performing machine learning models with training techniques that had previously proven successful in facial recognition tasks. Further analysis by challenge partners at Wild Me found that the winners outperformed previous state-of-the-art solutions by 15-20% across key accuracy metrics.
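The specific facial recognition techniques are not named here. As one well-known example of the genre, shown purely for illustration and not as the winners' confirmed method, the ArcFace additive angular margin loss trains a network so that embeddings of the same individual cluster tightly on a hypersphere. For a training sample $i$ with label $y_i$, let $\theta_j$ be the angle between the sample's normalized embedding and the normalized weight vector of class $j$, let $s$ be a scale factor, and let $m$ be an angular margin:

$$
\mathcal{L}_i = -\log \frac{e^{\,s\cos(\theta_{y_i} + m)}}{e^{\,s\cos(\theta_{y_i} + m)} + \sum_{j \neq y_i} e^{\,s\cos\theta_j}}
$$

The margin $m$ forces the correct class to win by a fixed angle rather than merely having the largest score, which tends to produce embeddings that separate individuals well, a property that carries over naturally from distinguishing human faces to distinguishing individual animals.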

Following the challenge, engineers at Wild Me adapted techniques from the winning solutions into a new algorithm, MIEW-ID, which they incorporated into the image analysis pipeline of their wildlife data platform. The new functionality includes explanatory visualizations that show users which parts of an image the model considered influential, using a Grad-CAM approach that came out of the challenge's explainability bonus round.
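For reference, the standard Grad-CAM definition computes a heatmap from the feature maps $A^k$ of a chosen convolutional layer and the gradient of a target score $y^c$ with respect to those maps. Each feature map is weighted by its spatially averaged gradient, where $Z$ is the number of spatial positions $(i, j)$:

$$
\alpha_k^c = \frac{1}{Z} \sum_i \sum_j \frac{\partial y^c}{\partial A^k_{ij}},
\qquad
L^c_{\text{Grad-CAM}} = \operatorname{ReLU}\!\left( \sum_k \alpha_k^c A^k \right)
$$

In classification, $y^c$ is the score for class $c$. How MIEW-ID chooses the target score is not described here; in a matching setting, one plausible choice (an assumption) is the similarity between the query and a candidate image, so the heatmap highlights the regions that drove that particular match.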

The impact extends beyond beluga conservation. The resulting system has been successfully applied to a dozen other cetacean species, such as bottlenose dolphins, and even to terrestrial species such as African lions and African leopards.